Videogame Autoclassifier

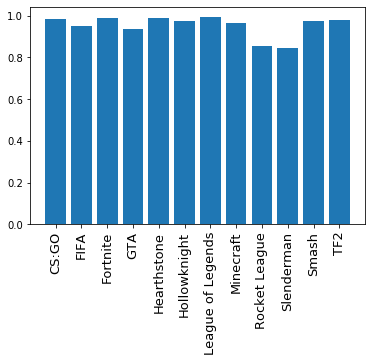

This autoclassifier is a computer vision project I developed with Nikhil Devraj, Victor Hao, and Nicholas Kroetsch at the University of Michigan. The app, intended for videogame streamers, uses a convolutional neural network to identify which videogame is currently being played on the computer and updates the Twitch stream information accordingly. We achieved an unexpectedly high accuracy of over 99.99% at distinguishing among 12 popular videogames. Honestly, the accuracy was so high that we were left wondering why Twitch hasn't implemented automatic game identification for streamers yet.

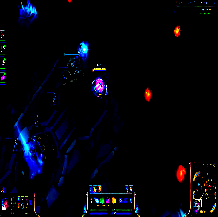

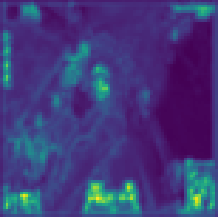

Yeah, these two images have nothing to do with this section and really belong further down the page. They’re here for the pretty pictures.

You can download the paper here. Forewarning: the writing is a bit rushed. If you’re interested in the code, please email me.

The Problem: Streamers Forget to Update Their Game Info

Millions of people stream on Twitch every day. Every one of them must manually enter the game they are currently playing into Twitch, and must manually update it whenever they switch games. This information is critical to both Twitch and the streamer: if a streamer forgets to update it, the stream is miscategorized under the wrong game, shows up in the wrong searches, and looks rather unprofessional. This is especially annoying for streamers who play through many games in a short period of time, such as when demoing small indie games.

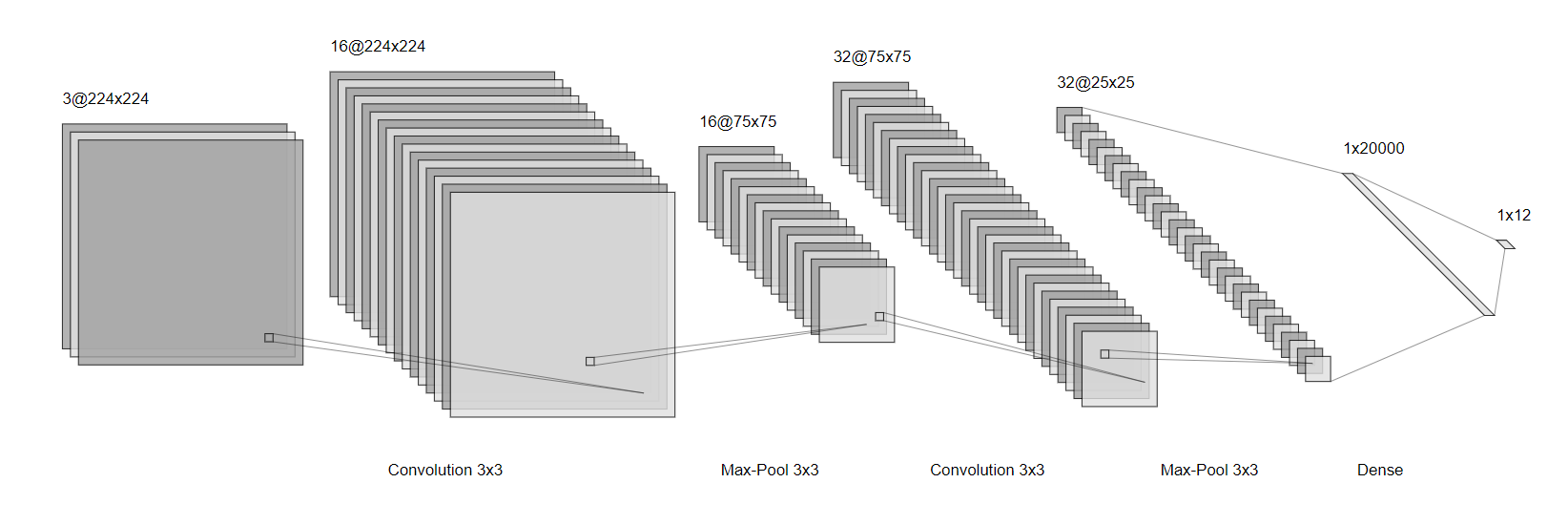

The Solution: Let a CNN Identify Videogames

There is a massive amount of videogame footage on both Twitch and YouTube. My team thought: with such a vast training set, shouldn’t it be feasible to train a convolutional neural network to classify games from screenshots?

After reviewing the literature on similar computer vision problems, we decided to base our approach on art style identification, since we hypothesized that the varying art styles of videogames would be salient enough for a CNN to pick up on (details and references are in the paper). We chose 12 popular videogames of varying genres, art styles, and budgets (League of Legends, CS:GO, Minecraft, Fortnite, GTA V, FIFA 20, Hearthstone, Super Smash Bros. Ultimate, Rocket League, TF2, Hollow Knight, and Slenderman). We downloaded 7 hours of footage of each game from YouTube and extracted a screenshot every five seconds, for a total of about 5,000 images per game.
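As a quick check on that number: 7 hours is 25,200 seconds, and one screenshot every 5 seconds gives 25,200 / 5 = 5,040 screenshots per game. The minimal sketch below computes which frame indices to pull out of a video. It assumes a constant frame rate, and the 60 fps default is a placeholder: the tooling we actually used for extraction isn't described here. Seeking to these indices and saving the frames (for example with a video library such as OpenCV) is left out.

```python
# Sketch: which frames to keep when sampling one screenshot every few seconds.
# Assumptions (not from the original project): constant frame rate, and we keep
# the first frame of each interval. The 60 fps default is illustrative only.

def sample_frame_indices(duration_s: float, fps: float = 60.0,
                         interval_s: float = 5.0) -> list[int]:
    """Return the frame indices to extract, one every `interval_s` seconds."""
    if fps <= 0 or interval_s <= 0:
        raise ValueError("fps and interval_s must be positive")
    total_frames = int(duration_s * fps)
    step = fps * interval_s          # frames between consecutive samples
    indices = []
    k = 0
    while True:
        idx = round(k * step)        # round handles non-integer rates like 29.97
        if idx >= total_frames:
            break
        indices.append(idx)
        k += 1
    return indices


seven_hours = 7 * 60 * 60            # 25,200 seconds of footage per game
frames = sample_frame_indices(seven_hours)
print(len(frames))                   # one entry per saved screenshot
```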

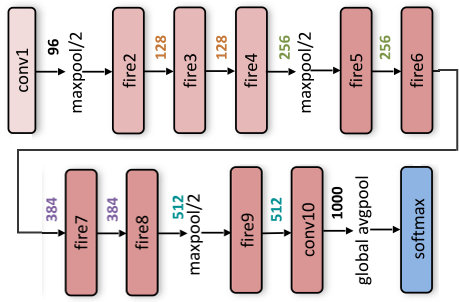

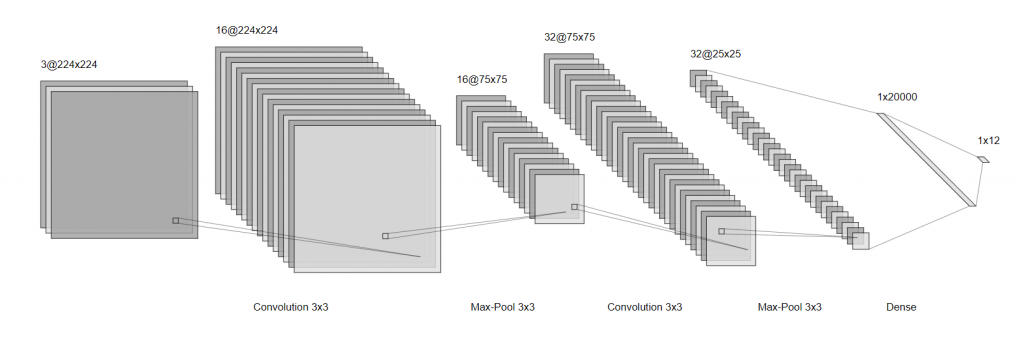

We spent a while adapting a SqueezeNet variant but struggled to tune its classifier to a useful accuracy. As a sanity check, we implemented a simpler two-layer CNN and quickly realized that it was more than effective enough. With some tweaking, it reached over 95% accuracy on individual screenshots.
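The project's actual layer sizes aren't listed on this page, so the sketch below is a hypothetical illustration of how little a two-layer CNN involves. It tracks tensor shapes and parameter counts through two "conv, ReLU, max-pool" stages followed by a linear classifier over the 12 games. The 128×128 RGB input, 3×3 kernels, and 16/32 channel counts are made-up placeholders, not the values we used.

```python
# Hypothetical shape/parameter bookkeeping for a small "conv -> ReLU -> pool" x 2
# network feeding a linear layer over 12 classes. All hyperparameters below are
# illustrative placeholders, not the configuration used in the project.

def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Standard output-size formula for a convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


def two_layer_cnn_summary(height=128, width=128, in_ch=3,
                          channels=(16, 32), kernel=3, pool=2, num_classes=12):
    """Return per-layer (name, output shape, parameter count) rows and the total."""
    h, w, c = height, width, in_ch
    total_params = 0
    rows = []
    for out_ch in channels:
        # Convolution with "same" padding keeps the spatial size (odd kernel).
        pad = kernel // 2
        h, w = conv_out(h, kernel, 1, pad), conv_out(w, kernel, 1, pad)
        params = out_ch * (c * kernel * kernel + 1)   # weights + one bias per filter
        total_params += params
        rows.append(("conv", (out_ch, h, w), params))
        c = out_ch
        # Non-overlapping max pooling (stride == window); ReLU has no parameters.
        h, w = conv_out(h, pool, pool), conv_out(w, pool, pool)
        rows.append(("pool", (c, h, w), 0))
    fc_params = (c * h * w + 1) * num_classes         # flattened features -> classes
    total_params += fc_params
    rows.append(("linear", (num_classes,), fc_params))
    return rows, total_params


rows, total = two_layer_cnn_summary()
for name, shape, params in rows:
    print(f"{name:>6}  shape={shape}  params={params:,}")
print(f"total parameters: {total:,}")
```

With these placeholder sizes, almost all of the parameters sit in the final linear layer; the two convolutional layers together contribute only a few thousand.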

More Nines is Always Better

I then wrote a simple Python app that takes a screenshot of your computer every few seconds, runs it through the CNN, and sends an HTTP request to Twitch to update your stream information if necessary. Because the app can combine predictions from multiple screenshots taken over a time interval, its theoretical accuracy skyrockets past an astounding 99.99%, and that's a conservative estimate.

Cool Analysis

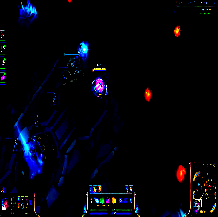

We looked at the activation maps of the CNN layers to see whether we could tell what the CNN was picking up on to reach such dizzying accuracy. One clear finding was that whenever a significant amount of UI was on screen, the CNN zeroed in on it. It would be interesting to investigate further how accurate the app is when there is no UI, or unusual UI (e.g. a pause menu), on screen.

Press X to Doubt

Although we sincerely believe our project's accuracy indicates that this would be feasible at scale (our CNN is very small and runs so fast we almost forgot it was machine learning), there are a couple of caveats. Actually, just one big one: we only used 12 games, and Twitch obviously has many more than that. As the number of games to choose between goes up, the classifier's accuracy would likely go down, especially since certain sets of games are probably visually very similar. For example, some games like RimWorld and Prison Architect share visual assets, while many games built on the same game engine share distinct visual and UI styles.

However, this drop in single-image accuracy is offset by the app's ability to take screenshots over time and combine multiple guesses in its decision-making. If there are many games to classify between, we think it's likely that false identifications would be split among multiple games, so the correct game should still stand out to the app based on the relative frequency of the single-image classifications.
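A minimal sketch of this kind of decision rule (not the app's actual code): keep the last few single-screenshot predictions, and only report a new game when the most frequent label holds more than a set share of that window. The `window` and `min_share` values are made-up defaults, and the code that captures screenshots, runs the CNN, and calls Twitch is left out. A non-`None` return value is what would trigger the HTTP request to update the stream information.

```python
from collections import Counter, deque
from typing import Optional


class GameVoter:
    """Vote over the most recent single-screenshot predictions.

    Hypothetical parameters: `window` is how many recent predictions to keep,
    and `min_share` is the fraction of the window the top game must exceed
    before it is reported.
    """

    def __init__(self, window: int = 9, min_share: float = 0.5):
        self.recent: deque = deque(maxlen=window)
        self.min_share = min_share
        self.current: Optional[str] = None   # game currently reported to Twitch

    def add(self, prediction: str) -> Optional[str]:
        """Record one prediction; return the new game if the stream info
        should be updated, otherwise None."""
        self.recent.append(prediction)
        if len(self.recent) < self.recent.maxlen:
            return None                       # wait until the window is full
        game, count = Counter(self.recent).most_common(1)[0]
        if count / len(self.recent) > self.min_share and game != self.current:
            self.current = game
            return game
        return None


voter = GameVoter(window=5)
predictions = ["Minecraft", "Minecraft", "Fortnite", "Minecraft", "Minecraft",
               "Hollow Knight", "Hollow Knight", "Hollow Knight", "Hollow Knight"]
updates = [u for u in (voter.add(p) for p in predictions) if u is not None]
print(updates)
```

The stray "Fortnite" misclassification never wins the vote, and the switch to Hollow Knight is reported only once it dominates the window. Waiting for a full window also avoids committing to a game based on the very first screenshot after startup.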

Finally, we specifically searched for footage from streamers without facecams. If the app runs locally, it can take screenshots without the facecam. However, if Twitch runs the CNN, the images will include facecams. Adding facecams could hinder the classifier, although I think it would take only a little extra work to get the CNN to ignore the facecam. In the worst case, the app could be made to run locally instead of on Twitch's servers.
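One simple way to make the CNN ignore a facecam is sketched below. This is an assumption we didn't test. If the facecam's position is known (for example, from the streamer's overlay layout), that rectangle can be blacked out before classification. The same masking would also be applied to the training screenshots so the network never learns to rely on that region. To stay dependency-free, the sketch stores an image as a list of rows of (R, G, B) tuples; a real app would more likely do the equivalent slice assignment on a NumPy array (`img[top:bottom, left:right] = 0`).

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def mask_region(image: Image, left: int, top: int, width: int, height: int,
                fill: Pixel = (0, 0, 0)) -> Image:
    """Return a copy of `image` with the given rectangle filled with `fill`.

    The rectangle is clipped to the image bounds, so a facecam box that runs
    off the edge of the frame is handled gracefully.
    """
    rows = len(image)
    cols = len(image[0]) if rows else 0
    # Compute the far edges before clamping the near edges to the image.
    bottom, right = min(top + height, rows), min(left + width, cols)
    top, left = max(top, 0), max(left, 0)
    masked = [list(row) for row in image]       # copy so the input is untouched
    for y in range(top, bottom):
        for x in range(left, right):
            masked[y][x] = fill
    return masked


frame = [[(255, 255, 255)] * 4 for _ in range(3)]            # 4x3 white test image
out = mask_region(frame, left=2, top=1, width=5, height=5)   # box runs off the corner
```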

PROJECT INFO.

- COMPLETED AT: University of Michigan

- DATE RELEASED: Dec 2019

- CATEGORIES: Machine Learning

- TAGS: Computer Vision, Convolutional Neural Networks, Videogames